Using CloudFront with the Serverless Framework

Check out the following resources for examples of using the Serverless Framework with Amazon CloudFront:

- Single page web app with Serverless Framework and CloudFront

- Generating S3 signed URLs using Lambda@Edge and Serverless Framework

- Setting up a custom domain name for AWS Lambda and Amazon API Gateway using CloudFront and Serverless Framework

- Using the Serverless Next.js component with CloudFront and Lambda@Edge

Other resources

- Using Lambda@Edge with the Serverless Framework — CloudFront event documentation

- Serverless Framework — AWS provider documentation

- CloudFront documentation — AWS

- AWS Lambda — The Ultimate Guide

- Amazon S3 — The Ultimate Guide

Quickly replicate your infrastructure

If your application requires additional availability, you might replicate it in multiple regions so that if one region becomes unavailable, your users can still use your application in other regions. The challenge in replicating your application is that it also requires you to replicate your resources. Not only do you need to record all the resources that your application requires, but you must also provision and configure those resources in each region.

Reuse your CloudFormation template to create your resources in a consistent and repeatable manner. To reuse your template, describe your resources once and then provision the same resources over and over in multiple regions.

How does CloudFront work?

CloudFront acts as a distributed cache for your files, with cache locations around the world. It fetches your files from their source location (“origin” in CloudFront terms) and places the copies of the files in different edge locations across the Americas, Europe, Asia, Africa, and Oceania. In so doing, CloudFront speeds up access to your files for your end users.

Why is this worth doing? Imagine, for example, that your data origin is located in Brazil and one of your customers in Japan would like to access this data. Without CloudFront (or a similar solution) this customer would need to send a request to the other side of the world, transferring the files from a very distant location. The request would be slow to arrive at its destination, and the file download would be slow as well. Having your customers wait longer to get data often makes for a poor customer experience.

With CloudFront, however, the files are periodically fetched by the CloudFront system from the location in Brazil and placed onto a set of servers around the world, including one in Japan. When a user in Japan goes to download the files, the request is served by a nearby server, which means lower latency, a higher download speed, and a better customer experience.

(Note: a distributed cache model is just one approach to building a CDN; we discuss other approaches in the CloudFront alternatives section in this article.)

Fetching an image from the origin server and storing it in S3

Suppose you have a media server that hosts all the images your site needs, and suppose the server triggers a notification whenever an existing image is updated or a new image is added. These images can be fetched, transformed, and stored in an S3 bucket.

Figure 1: Fetching an image from the origin server with Lambda

Figure 1 shows an AWS Lambda function that retrieves the original image from the media server, transforms it, and stores it in an S3 bucket. The function also listens to a message queue to receive image updates published by the media server and updates the image in S3.

Easily control and track changes to your infrastructure

In some cases, you might have underlying resources that you want to upgrade incrementally. For example, you might change to a higher performing instance type in your Auto Scaling launch configuration so that you can reduce the maximum number of instances in your Auto Scaling group. If problems occur after you complete the update, you might need to roll back your infrastructure to the original settings. To do this manually, you not only have to remember which resources were changed, you also have to know what the original settings were.

When you provision your infrastructure with CloudFormation, the CloudFormation template describes exactly what resources are provisioned and their settings. Because these templates are text files, you simply track differences in your templates to track changes to your infrastructure, similar to the way developers control revisions to source code. For example, you can use a version control system with your templates so that you know exactly what changes were made, who made them, and when. If at any point you need to reverse changes to your infrastructure, you can use a previous version of your template.
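Because templates are plain text, the change tracking described above can be illustrated with an ordinary text diff. The following is a minimal sketch using only the Python standard library; the two template revisions, the resource names, and the instance types are hypothetical and stand in for whatever your version control system would show you.

```python
import difflib

# Two hypothetical revisions of the same template: the newer one moves to a
# larger instance type and lowers the Auto Scaling maximum.
OLD_TEMPLATE = """\
Resources:
  LaunchConfig:
    Type: AWS::AutoScaling::LaunchConfiguration
    Properties:
      InstanceType: t3.medium
  WebServerGroup:
    Type: AWS::AutoScaling::AutoScalingGroup
    Properties:
      MaxSize: "10"
"""

NEW_TEMPLATE = OLD_TEMPLATE.replace("t3.medium", "m5.large").replace('"10"', '"4"')


def template_diff(old: str, new: str) -> list:
    """Return unified-diff lines between two template revisions."""
    return list(
        difflib.unified_diff(
            old.splitlines(),
            new.splitlines(),
            fromfile="template.yaml (previous)",
            tofile="template.yaml (current)",
            lineterm="",
        )
    )


def changed_lines(diff: list) -> list:
    """Keep only added/removed lines, skipping the ---/+++ file headers."""
    return [
        line
        for line in diff
        if line.startswith(("+", "-")) and not line.startswith(("---", "+++"))
    ]


if __name__ == "__main__":
    print("\n".join(template_diff(OLD_TEMPLATE, NEW_TEMPLATE)))
```

Rolling back amounts to deploying the previous revision again; the diff in the other direction, `template_diff(NEW_TEMPLATE, OLD_TEMPLATE)`, shows exactly which settings would be restored.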

Using wildcards in alternate domain names

When you add alternate domain names, you can use the * wildcard at the beginning of a domain name instead of adding subdomains individually. For example, with an alternate domain name of *.example.com, you can use any domain name that ends with example.com in your URLs, such as www.example.com, product-name.example.com, marketing.product-name.example.com, and so on. The path to the object is the same regardless of the domain name, for example:

- www.example.com/images/image.jpg

- product-name.example.com/images/image.jpg

- marketing.product-name.example.com/images/image.jpg

Follow these requirements for alternate domain names that include wildcards:

- The alternate domain name must begin with an asterisk and a dot (*.).

- You cannot use a wildcard to replace part of a subdomain name, like this: *domain.example.com.

- You cannot replace a subdomain in the middle of a domain name, like this: subdomain.*.example.com.

- All alternate domain names, including alternate domain names that use wildcards, must be covered by the subject alternative name (SAN) on the certificate.

A wildcard alternate domain name, such as *.example.com, can include another alternate domain name that’s in use, such as example.com.
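The wildcard placement rules above can be expressed as a small check. This is an illustrative sketch only, not CloudFront's own validation: the function names are made up for this example, and it assumes that a wildcard name covers subdomains at any depth but not the bare domain itself.

```python
def is_valid_alternate_name(name: str) -> bool:
    """Check wildcard placement: a wildcard may only be the whole leftmost label."""
    if "*" not in name:
        return True
    # Must start with "*." and contain no further asterisk anywhere else.
    return name.startswith("*.") and len(name) > 2 and "*" not in name[2:]


def matches(alternate_name: str, host: str) -> bool:
    """Return True if `host` is covered by `alternate_name`."""
    alternate_name = alternate_name.lower()
    host = host.lower().rstrip(".")
    if alternate_name.startswith("*."):
        suffix = alternate_name[1:]  # "*.example.com" -> ".example.com"
        # Assumption: at least one label must precede the suffix.
        return host.endswith(suffix) and len(host) > len(suffix)
    return host == alternate_name


if __name__ == "__main__":
    for candidate in ("*.example.com", "*domain.example.com", "subdomain.*.example.com"):
        print(candidate, is_valid_alternate_name(candidate))
```

Matching on the suffix with its leading dot is what keeps a host such as badexample.com from being treated as a subdomain of example.com.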

Permissions required to configure standard logging and to access your log files

Important

Don’t choose an Amazon S3 bucket with S3 Object Ownership set to bucket owner enforced. That setting disables ACLs for the bucket and the objects in it, which prevents CloudFront from delivering log files to the bucket.

Your AWS account must have the following permissions for the bucket that you specify for log files:

- The S3 access control list (ACL) for the bucket must grant you . If you’re the bucket owner, your account has this permission by default. If you’re not, the bucket owner must update the ACL for the bucket.

Note the following:

ACL for the bucket

When you create or update a distribution and enable logging, CloudFront uses these permissions to update the ACL for the bucket to give the account permission. The account writes log files to the bucket. If your account doesn’t have the required permissions to update the ACL, creating or updating the distribution will fail.

In some circumstances, if you programmatically submit a request to create a bucket but a bucket with the specified name already exists, S3 resets permissions on the bucket to the default value. If you configured CloudFront to save access logs in an S3 bucket and you stop getting logs in that bucket, check permissions on the bucket to ensure that CloudFront has the necessary permissions.

Restoring the ACL for the bucket

If you remove permissions for the account, CloudFront won’t be able to save logs to the S3 bucket. To enable CloudFront to start saving logs for your distribution again, restore the ACL permission by doing one of the following:

- Disable logging for your distribution in CloudFront, and then enable it again. For more information, see Values That You Specify When You Create or Update a Distribution.

- Add the ACL permission for manually by navigating to the S3 bucket in the Amazon S3 console and adding permission. To add the ACL for , you must provide the canonical ID for the account, which is the following:

For more information about adding ACLs to S3 buckets, see How Do I Set ACL Bucket Permissions? in the Amazon Simple Storage Service User Guide.

ACL for each log file

In addition to the ACL on the bucket, there’s an ACL on each log file. The bucket owner has permission on each log file, the distribution owner (if different from the bucket owner) has no permission, and the account has read and write permissions.

Disabling logging

If you disable logging, CloudFront doesn’t delete the ACLs for either the bucket or the log files. If you want, you can do that yourself.

Usage

IMPORTANT: We do not pin modules to versions in our examples because of the difficulty of keeping the versions in the documentation in sync with the latest released versions. We highly recommend that in your code you pin the version to the exact version you are using so that your infrastructure remains stable, and update versions in a systematic way so that they do not catch you by surprise.

Also, because of a bug in the Terraform registry (hashicorp/terraform#21417), the registry shows many of our inputs as required when in fact they are optional. The table below correctly indicates which inputs are required.

For a complete example, see examples/complete.

For automated tests of the complete example using bats and Terratest (which tests and deploys the example on AWS), see test.

Complete example of setting up CloudFront Distribution with Cache Behaviors for a WordPress site:

Cloudflare vs Amazon CloudFront: A Recap

To sum up, both Cloudflare and Amazon CloudFront offer content delivery network functionality that can speed up your website’s global page load times and reduce the load on your server.

Cloudflare is a reverse proxy which means, in part, that you’ll use Cloudflare’s nameservers and Cloudflare will actually handle directing traffic for your site. This also comes with other benefits, like security and DDoS protection.

Amazon CloudFront, on the other hand, is more of a “traditional” CDN. You won’t need to change your nameservers. Instead, you can either have CloudFront automatically “pull” files from your WordPress site’s server onto CloudFront’s servers, or you can use a plugin like WP Offload Media Lite to “push” files into an Amazon S3 bucket and have CloudFront serve them from there.

Of the two, Cloudflare has a much simpler setup process and is the best option for most WordPress users who don’t need detailed control over how the CDN cache works and don’t have unique situations like live streaming content.

Finally, no matter which content delivery network you choose, WP Rocket can help you with the setup process, either through its CDN features or its dedicated Cloudflare integration.

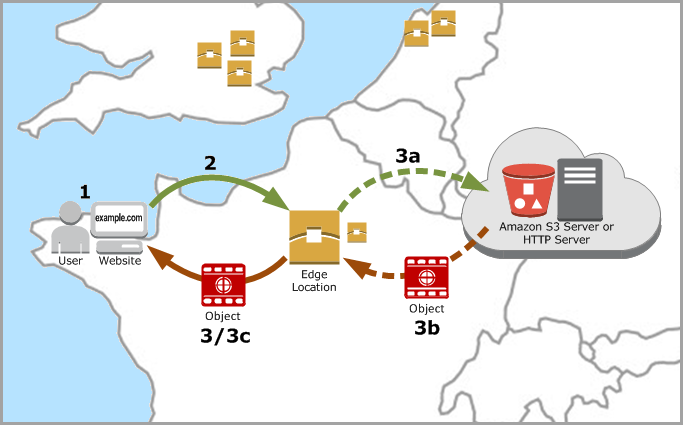

How CloudFront delivers content to your users

After you configure CloudFront to deliver your content, here’s what happens when users request your files:

- A user accesses your website or application and requests one or more files, such as an image file and an HTML file.

- DNS routes the request to the CloudFront POP (edge location) that can best serve the request, typically the nearest CloudFront POP in terms of latency, and routes the request to that edge location.

- In the POP, CloudFront checks its cache for the requested files. If the files are in the cache, CloudFront returns them to the user. If the files are not in the cache, it does the following:

  - CloudFront compares the request with the specifications in your distribution and forwards the request for the files to your origin server for the corresponding file type, for example, to your Amazon S3 bucket for image files and to your HTTP server for HTML files.

  - The origin servers send the files back to the edge location.

  - As soon as the first byte arrives from the origin, CloudFront begins to forward the files to the user. CloudFront also adds the files to the cache in the edge location for the next time someone requests those files.
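The lookup that happens inside a POP can be sketched as a toy model. The class, the path patterns, and the stand-in origin functions below are all hypothetical; the sketch only mirrors the steps listed above (check the cache, pick an origin by file type, store the response) and does not model streaming the first bytes to the user while the rest arrives.

```python
from fnmatch import fnmatchcase
from typing import Callable, Dict, List, Tuple

# An "origin" here is just a function from path to bytes; real origins are
# S3 buckets or HTTP servers.
Origin = Callable[[str], bytes]


class EdgeLocation:
    """Toy model of one POP: a cache plus path-pattern routing to origins."""

    def __init__(self, behaviors: List[Tuple[str, Origin]]) -> None:
        self.behaviors = behaviors  # checked in order, first match wins
        self.cache: Dict[str, bytes] = {}
        self.origin_fetches = 0

    def _pick_origin(self, path: str) -> Origin:
        for pattern, origin in self.behaviors:
            if fnmatchcase(path, pattern):
                return origin
        raise LookupError(f"no behavior matches {path}")

    def get(self, path: str) -> Tuple[bytes, str]:
        """Return (body, "hit" or "miss") for a requested path."""
        if path in self.cache:
            return self.cache[path], "hit"
        body = self._pick_origin(path)(path)  # forward the request to the origin
        self.origin_fetches += 1
        self.cache[path] = body  # keep a copy for the next request
        return body, "miss"


def s3_bucket(path: str) -> bytes:
    """Stand-in for an S3 origin that serves image files."""
    return b"image bytes for " + path.encode()


def http_server(path: str) -> bytes:
    """Stand-in for an HTTP origin that serves HTML files."""
    return b"<html>" + path.encode() + b"</html>"


if __name__ == "__main__":
    edge = EdgeLocation([("/images/*", s3_bucket), ("*", http_server)])
    print(edge.get("/images/image.jpg")[1])  # first request goes to the origin
    print(edge.get("/images/image.jpg")[1])  # second request is served from cache
```

Putting the catch-all pattern last matters in this sketch, because the first matching pattern decides which origin receives the request.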

Cloudflare vs CloudFront: The Basic Differences

Ok, so both Cloudflare and CloudFront are CDNs. However, beyond that, there’s a big difference in how they function.

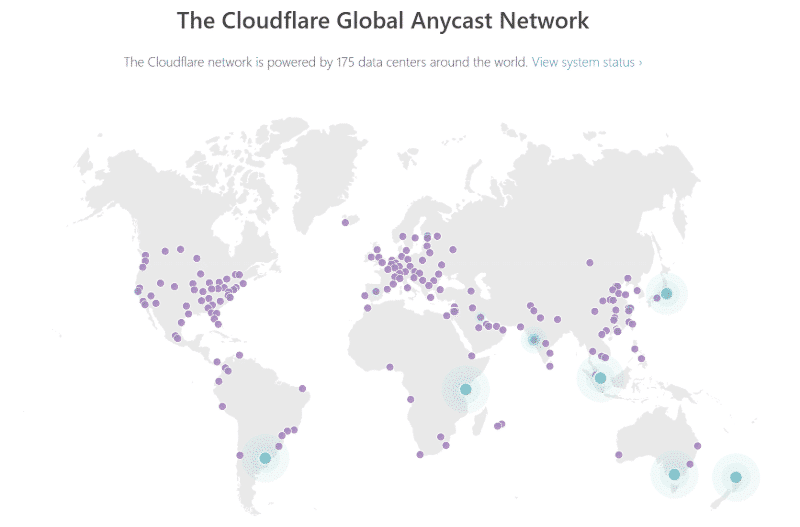

Cloudflare Explained

Cloudflare is actually a reverse proxy. In part, that means that, when you set up your site with Cloudflare, you’ll actually point your domain’s nameservers to Cloudflare.

Then, Cloudflare will direct all the traffic to your site. This gives Cloudflare a lot of control over your site, and this is also what allows Cloudflare to offer extra functionality beyond its CDN (more on this in a second).

Here’s the process in a little more detail:

When someone visits your site, Cloudflare will take your static content and store your content on Cloudflare’s network of servers around the world. Then, for future visitors, Cloudflare can serve up that cached static content from the Cloudflare edge server that’s nearest to each visitor.

Because of how this approach works, all of your content will still load from yoursite.com. This is different from how a lot of other CDNs work, where it’s common to serve your content from a separate URL like cdn.yoursite.com.

Beyond its CDN functionality, Cloudflare also has a number of other benefits on its free plan including:

- Free shared SSL certificate

- DDoS protection

If you’re willing to pay for a premium plan, you can also add on functionality like:

- Web application firewall (WAF)

- Image and mobile optimization

- More control over security and your CDN

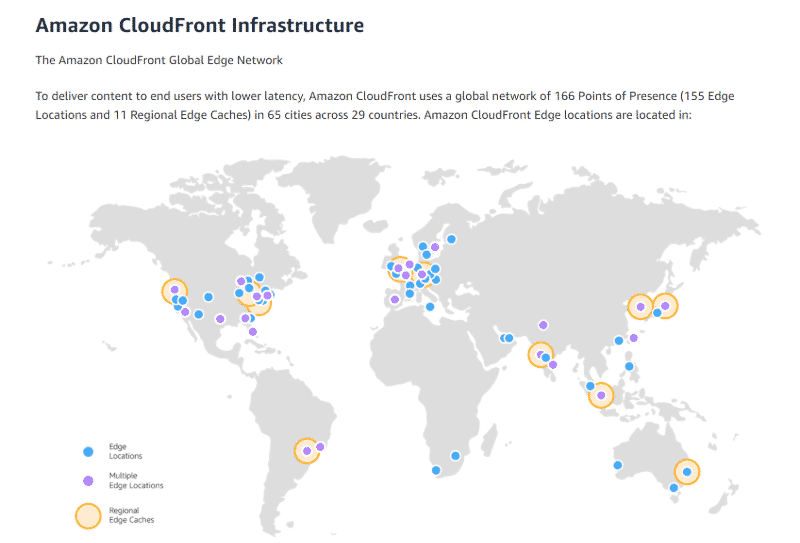

Amazon CloudFront Explained

Amazon CloudFront, on the other hand, is more of a “traditional” CDN. That is, you don’t need to change your nameservers to CloudFront like you do with Cloudflare’s reverse proxy approach.

Instead, CloudFront will automatically “pull” the data from your origin server onto CloudFront’s network of servers around the world. It’s also possible to “push” your content on to CloudFront – more on this later.

However, because CloudFront isn’t controlling your nameservers like Cloudflare does, CloudFront cannot automatically make your WordPress site serve up content from a different edge server.

That’s where the separate URL comes in.

Using something like cdn.yoursite.com, you’ll rewrite the URLs of the static content on your site so that they load content from cdn.yoursite.com (the nearest CloudFront edge server) instead of yoursite.com (your origin server).
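To make the rewriting step concrete, here is a small sketch of what such a URL rewrite could look like. It is not how WP Rocket or any particular plugin implements it; the function name and the list of extensions are assumptions chosen for illustration.

```python
import re

# Hypothetical list of file types to serve through the CDN.
STATIC_EXTENSIONS = ("css", "js", "png", "jpg", "jpeg", "gif", "svg", "woff2")


def rewrite_static_urls(html: str, site_host: str, cdn_host: str) -> str:
    """Point static-asset URLs at the CDN host, leaving page links alone."""
    pattern = re.compile(
        r"(https?:)?//" + re.escape(site_host)
        + r"(/[^\s\"'<>]*\.(?:" + "|".join(STATIC_EXTENSIONS) + r"))"
        + r"(?=[\"'\s?#>]|$)",
        re.IGNORECASE,
    )
    # Keep the original scheme (or protocol-relative form) and the path.
    return pattern.sub(
        lambda m: f"{m.group(1) or ''}//{cdn_host}{m.group(2)}", html
    )


if __name__ == "__main__":
    page = (
        '<img src="https://yoursite.com/wp-content/uploads/logo.png">'
        '<a href="https://yoursite.com/about/">About</a>'
    )
    print(rewrite_static_urls(page, "yoursite.com", "cdn.yoursite.com"))
```

Only URLs that end in one of the listed extensions are touched, so ordinary page links keep pointing at the origin server.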

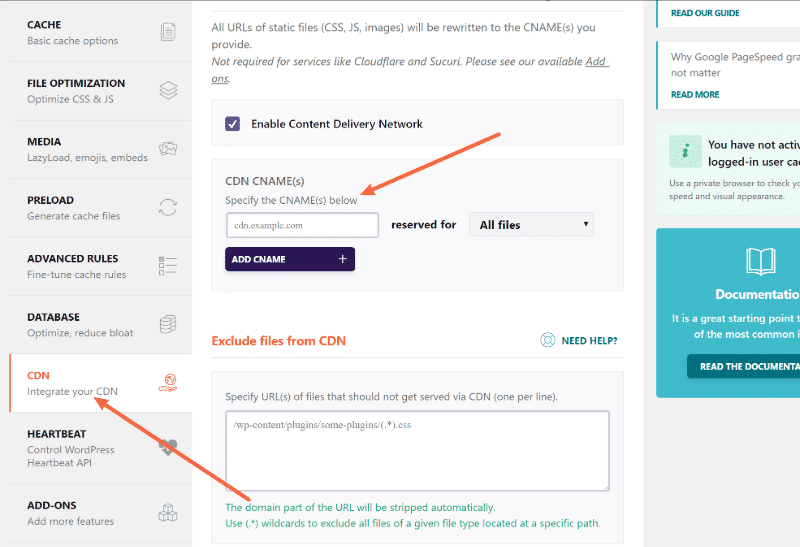

This is what the CDN tab helps you do in WP Rocket – you can enter the URL of your CDN and choose which files it should apply to (and even manually exclude certain files from being served via the CDN):

CloudFront is also part of the whole Amazon Web Services (AWS) ecosystem, which makes it convenient if you’re using other AWS services (like Amazon S3).

CloudFront offers 50 GB of free data transfer for one year. After that, you’ll pay per GB of data transfer.

Should You Use Cloudflare or CloudFront on WordPress?

Most WordPress users will be better suited by Cloudflare because:

- It has a simpler setup process than Amazon CloudFront

- The free plan will fit the needs of most WordPress users

- WP Rocket offers a dedicated Cloudflare integration

- Cloudflare has a slightly larger network of edge servers, though the difference is small

- Cloudflare does “more” than just content delivery, with lots of beneficial security features as well

That’s certainly not to say that Cloudflare is always better than Amazon CloudFront. It’s just that a lot of CloudFront’s benefits aren’t things that most WordPress users will care about.

For example, CloudFront gives you more control over nitty-gritty details like HTTP headers and cache invalidation, and CloudFront also works with live streaming content.

However, most WordPress users won’t need that functionality, and will be better served by the simplicity of Cloudflare.

Security & Compliance

Security scanning is graciously provided by Bridgecrew. Bridgecrew is the leading fully hosted, cloud-native solution providing continuous Terraform security and compliance.

| Benchmark | Description |

|---|---|

| Infrastructure Security Compliance | |

| Center for Internet Security, KUBERNETES Compliance | |

| Center for Internet Security, AWS Compliance | |

| Center for Internet Security, AZURE Compliance | |

| Payment Card Industry Data Security Standards Compliance | |

| National Institute of Standards and Technology Compliance | |

| Information Security Management System, ISO/IEC 27001 Compliance | |

| Service Organization Control 2 Compliance | |

| Center for Internet Security, GCP Compliance | |

| Health Insurance Portability and Accountability Compliance | |

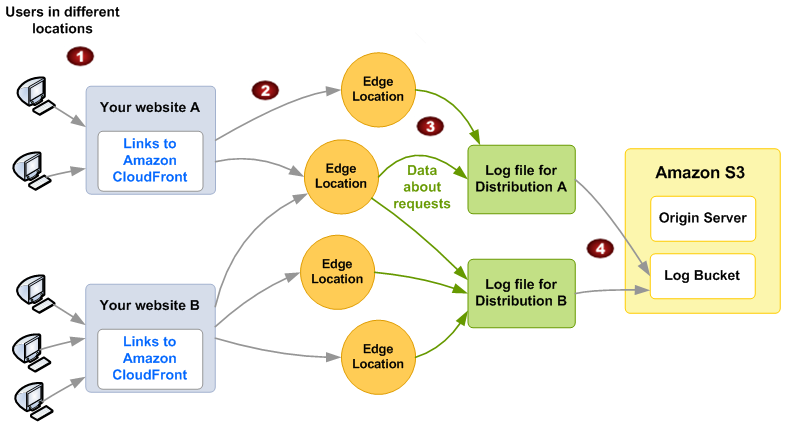

How standard logging works

The following diagram shows how CloudFront logs information about requests for your objects.

The following explains how CloudFront logs information about requests for your objects, as illustrated in the previous diagram.

- In this diagram, you have two websites, A and B, and two corresponding CloudFront distributions. Users request your objects using URLs that are associated with your distributions.

- CloudFront routes each request to the appropriate edge location.

- CloudFront writes data about each request to a log file specific to that distribution. In this example, information about requests related to Distribution A goes into a log file just for Distribution A, and information about requests related to Distribution B goes into a log file just for Distribution B.

- CloudFront periodically saves the log file for a distribution in the Amazon S3 bucket that you specified when you enabled logging. CloudFront then starts saving information about subsequent requests in a new log file for the distribution.

If no users access your content during a given hour, you don’t receive any log files for that hour.

Each entry in a log file gives details about a single request. For more information about log file format, see .

Important

We recommend that you use the logs to understand the nature of the requests for your content, not as a complete accounting of all requests. CloudFront delivers access logs on a best-effort basis. The log entry for a particular request might be delivered long after the request was actually processed and, in rare cases, a log entry might not be delivered at all. When a log entry is omitted from access logs, the number of entries in the access logs won’t match the usage that appears in the AWS usage and billing reports.


Amazon CloudFront Alternatives

CloudFront is the only CDN service offered by AWS, but there are other services from Amazon and from other providers that you could use to achieve the same goal—giving your users fast access to your static files at scale.

Amazon S3. Amazon S3 is a cloud file-storage solution from Amazon, and while it’s not a CDN like CloudFront, it can work well for the purpose of distributing files to your end users. With both CloudFront and S3 alike, the core of the cost is the data transfer from file storage to your end users. S3 can be cheaper, since you’ll only pay for data transfer from your region of origin, while with CloudFront you’ll be paying for data transfer in all regions where your users access the files. This can of course include regions where data transfer is very expensive, for example, South America or Australia.

But using S3 will also likely be slower for your end users, unless they all happen to be located near the region where your S3 bucket is hosted.

In short, using S3 directly (without CloudFront) can be a good option for cases where a small amount of extra latency is acceptable for your users.

Google Cloud CDN. Google Cloud CDN offers very similar functionality to that of CloudFront. The key difference is the pricing structure: Google Cloud CDN exposes more detail about the cache structure and charges for cache lookups, cache fill data transfer, and cache egress data transfer. The total cost of using Google Cloud CDN should be in the same range as CloudFront, as most of the cost still lies in the data transfer charges, and data transfer is priced similarly for both services. The extra visibility you get into the details of how Google Cloud CDN works through their pricing model can allow you to reduce the CDN’s cost and optimize its performance.

Cloudflare CDN. Cloudflare is a company that provides CDN and related solutions. The technical implementation of their CDN solution is different from that of CloudFront and Google Cloud CDN: Cloudflare is built as a reverse proxy. Cloudflare’s customers point the name servers for their domain(s) to Cloudflare, whose CDN service then becomes the primary endpoint for all HTTP or HTTPS requests each domain receives. Cloudflare retrieves any files it’s missing from the customer’s backend server.

This method differs from CloudFront’s, in which you configure an explicit set of files to be available in a CloudFront distribution and let the CloudFront infrastructure periodically fetch the up-to-date versions of the content for you.

Cloudflare CDN has a different pricing structure: rather than paying for the traffic out to the public internet, you pay a flat monthly fee plus a usage fee based on the number of DNS requests made to your site.

When using Cloudflare, you have access to fewer configuration options than in CloudFront. But in more conventional use cases, such as caching a website, using Cloudflare can result in a site that’s equally fast while paying smaller CDN bills.

Usage

Configuring CloudFront

- Create a CloudFront distribution

- Configure your origin with the following settings:

  Origin Domain Name: {your-s3-bucket}
  Restrict Bucket Access: Yes
  Grant Read Permissions on Bucket: Yes, Update Bucket Policy

API

- — Domain name of your Cloudfront distribution

- — Path to s3 object

- — URL signature

- — RTMP formatted server path

- — Signed RTMP formatted stream name

- — Cloudfront URL to sign

- — URL signature

- — Signed AWS cookies

- (Optional, default: 1800 sec == 30 min) The time when the URL should expire. Accepted values are

  - number: Time in milliseconds ()

  - Date: JavaScript Date object ()

- (Optional) IP address range allowed to make GET requests for your signed URL. This value must be given in standard IPv4 CIDR format (for example, 10.52.176.0/24).

- The access key ID from your CloudFront keypair

- || The private key from your CloudFront keypair. It can be provided as either a string or a path to the .pem file.

  Note: When providing the private key as a string, ensure that the newline character is also included.

      // Each PEM line ends with \n; the pieces are joined with +.
      var privateKeyString =
        '-----BEGIN RSA PRIVATE KEY-----\n' +
        'MIIJKAIBAAKCAgEAwGPMqEvxPYQIffDimM9t3A7Z4aBFAUvLiITzmHRc4UPwryJp\n' +
        'EVi3C0sQQKBHlq2IOwrmqNiAk31/uh4FnrRR1mtQm4x4IID58cFAhKkKI/09+j1h\n' +
        'tuf/gLRcOgAXH9o3J5zWjs/y8eWTKtdWv6hWRxuuVwugciNckxwZVV0KewO02wJz\n' +
        'jBfDw9B5ghxKP95t7/B2AgRUMj+r47zErFwo3OKW0egDUpV+eoNSBylXPXXYKvsL\n' +
        'AlznRi9xNafFGy9tmh70pwlGG5mVHswD/96eUSuLOZ2srcNvd1UVmjtHL7P9/z4B\n' +
        'KdODlpb5Vx+54+Fa19vpgXEtHgfAgGW9DjlZMtl4wYTqyGAoa+SLuehjAQsxT8M1\n' +
        'BXqfMJwE7D9XHjxkqCvd93UGgP+Yxe6H+HczJeA05dFLzC87qdM45R5c74k=\n' +
        '-----END RSA PRIVATE KEY-----';

  Also, here are some examples if you prefer to store your private key as a string but within an environment variable.

      # Local env example
      CF_PRIVATE_KEY="$(cat your-private-key.pem)"

      # Heroku env
      heroku config:set CF_PRIVATE_KEY="$(cat your-private-key.pem)"
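For readers curious about what is being signed, the sketch below builds the policy document that a CloudFront signed URL with an expiry time and an optional IP range is based on, and applies CloudFront's URL-safe base64 substitutions. It is not this library's code: the function names are made up, the distribution domain is a placeholder, and the RSA signing step with the private key is left out because the Python standard library has no RSA support. CloudFront policies express the expiry in epoch seconds, so a millisecond value like the one described above would need converting first.

```python
import base64
import json
import time
from typing import Optional

DEFAULT_EXPIRY_SECONDS = 1800  # the 30-minute default mentioned above


def build_policy(url: str, expires_at: Optional[int] = None,
                 ip_range: Optional[str] = None) -> str:
    """Build an unsigned CloudFront custom-policy JSON document."""
    if expires_at is None:
        expires_at = int(time.time()) + DEFAULT_EXPIRY_SECONDS
    condition = {"DateLessThan": {"AWS:EpochTime": expires_at}}
    if ip_range is not None:
        condition["IpAddress"] = {"AWS:SourceIp": ip_range}
    policy = {"Statement": [{"Resource": url, "Condition": condition}]}
    # Serialize without whitespace, as the policy is embedded in a URL.
    return json.dumps(policy, separators=(",", ":"))


def cloudfront_b64(data: bytes) -> str:
    """Base64-encode, then replace the characters that are invalid in a URL."""
    encoded = base64.b64encode(data).decode("ascii")
    return encoded.replace("+", "-").replace("=", "_").replace("/", "~")


if __name__ == "__main__":
    policy = build_policy(
        "https://d111111abcdef8.cloudfront.net/private/video.mp4",  # placeholder
        ip_range="10.52.176.0/24",
    )
    print(policy)
    print(cloudfront_b64(policy.encode("utf-8")))
```

A complete signer would sign the policy string with the key pair's private key and encode the signature with the same `cloudfront_b64` substitutions.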

Creating a static site on AWS S3

Sign in to your AWS account and create a bucket named www.mystaticsite77.ru (be sure to include www at the start of the name). Choose the region where your files should be stored.

On the Properties tab, enable Static website hosting.

Specify the index document (index.html) and the page to display on errors (404.html). Save the changes.

Note the URL (Endpoint) generated by AWS and copy it.

To make the bucket publicly accessible, change its policy under Permissions -> Bucket Policy.

Upload a new static HTML page to the bucket (Object->Upload).

The site should now be reachable at the long bucket endpoint URL, which looks something like this: http://www.mystaticsite77.ru.s3-website-us-east-1.amazonaws.com
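The bucket policy step is the one most easily mistyped, so here is a minimal sketch that generates a public-read policy for the bucket name used in this walkthrough. The helper name and the statement ID are assumptions; paste the printed JSON into the Bucket Policy editor, and treat it as a starting point, since it makes every object in the bucket readable by anyone.

```python
import json


def public_read_policy(bucket_name: str) -> str:
    """Return a bucket policy (as JSON) that lets anyone read objects."""
    policy = {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "PublicReadGetObject",  # arbitrary label for the statement
                "Effect": "Allow",
                "Principal": "*",
                "Action": "s3:GetObject",
                # The trailing /* applies the rule to every object in the bucket.
                "Resource": f"arn:aws:s3:::{bucket_name}/*",
            }
        ],
    }
    return json.dumps(policy, indent=2)


if __name__ == "__main__":
    print(public_read_policy("www.mystaticsite77.ru"))
```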

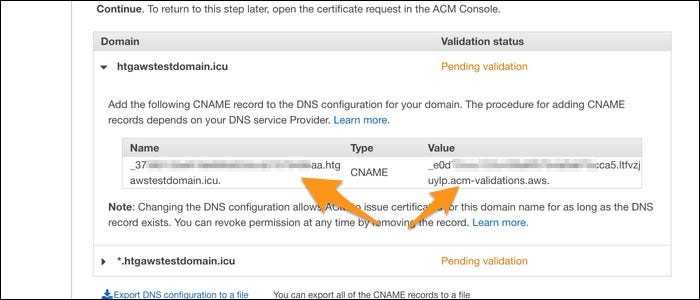

Requesting a certificate from ACM

If you plan to use your own URL (rather than ), you need to request a new SSL/TLS certificate in AWS Certificate Manager (ACM). For some reason there is no way to refresh the certificate drop-down list while you are configuring a CloudFront distribution, so you have to request the certificate beforehand.

It’s worth noting that this is in fact a completely free SSL certificate, a service for which many companies charge hundreds of dollars. Let’s Encrypt also offers free SSL, but its certificates must be renewed every few months with a cron job (granted, the job is set up automatically, so this is not a big problem). Certificates provided by ACM, however, are renewed automatically and never expire as long as they are in use.

Go to the ACM management console and request a new public certificate. Enter the domain names you will use (for example, , as well as ).

For each domain you must confirm that you own it by creating a new CNAME record in DNS. If you use AWS’s own Route 53 DNS, click the button to create these records automatically.

This can take a few minutes to process. Once validation completes, the orange “Pending validation” status turns into a green “Issued”, and you can move on to configuring CloudFront.

Properties

-

Note

In CloudFormation, this field name is . Note the different capitalization.

If the distribution uses (alternate domain names or CNAMEs) and the SSL/TLS certificate is stored in AWS Certificate Manager (ACM), provide the Amazon Resource Name (ARN) of the ACM certificate. CloudFront only supports ACM certificates in the US East (N. Virginia) Region ().

If you specify an ACM certificate ARN, you must also specify values for and . (In CloudFormation, the field name is . Note the different capitalization.)

Required: Conditional

Type: String

Update requires:

-

If the distribution uses the CloudFront domain name such as , set this field to .

If the distribution uses (alternate domain names or CNAMEs), set this field to and specify values for the following fields:

- or (specify a value for one, not both)

  In CloudFormation, these field names are and . Note the different capitalization.

- (In CloudFormation, this field name is . Note the different capitalization.)

Required: Conditional

Type: Boolean

Update requires:

-

Note

In CloudFormation, this field name is . Note the different capitalization.

If the distribution uses (alternate domain names or CNAMEs) and the SSL/TLS certificate is stored in AWS Identity and Access Management (IAM), provide the ID of the IAM certificate.

If you specify an IAM certificate ID, you must also specify values for and . (In CloudFormation, the field name is . Note the different capitalization.)

Required: Conditional

Type: String

Update requires:

-

If the distribution uses (alternate domain names or CNAMEs), specify the security policy that you want CloudFront to use for HTTPS connections with viewers. The security policy determines two settings:

- The minimum SSL/TLS protocol that CloudFront can use to communicate with viewers.

- The ciphers that CloudFront can use to encrypt the content that it returns to viewers.

For more information, see and in the Amazon CloudFront Developer Guide.

Note

On the CloudFront console, this setting is called Security Policy.

When you’re using SNI only (you set to ), you must specify or higher. (In CloudFormation, the field name is . Note the different capitalization.)

If the distribution uses the CloudFront domain name such as (you set to ), CloudFront automatically sets the security policy to regardless of the value that you set here.

Required: Conditional

Type: String

Allowed values:

Update requires:

-

Note

In CloudFormation, this field name is . Note the different capitalization.

If the distribution uses (alternate domain names or CNAMEs), specify which viewers the distribution accepts HTTPS connections from.

- – The distribution accepts HTTPS connections from only viewers that support server name indication (SNI). This is recommended. Most browsers and clients support SNI.

- – The distribution accepts HTTPS connections from all viewers including those that don’t support SNI. This is not recommended, and results in additional monthly charges from CloudFront.

- – Do not specify this value unless your distribution has been enabled for this feature by the CloudFront team. If you have a use case that requires static IP addresses for a distribution, contact CloudFront through the AWS Support Center.

If the distribution uses the CloudFront domain name such as , don’t set a value for this field.

Required: Conditional

Type: String

Allowed values:

Update requires:

How does CloudFront integrate with other AWS services?

To speak of CloudFront being integrated with another AWS service chiefly refers to CloudFront’s ability to use that service as a data source for distribution. These integrations include:

Amazon S3. It’s possible (and in fact very common) to use an Amazon S3 bucket as a source from which CloudFront will request files before placing them in its edge locations. This works both with standard S3 buckets and with buckets configured as website endpoints. When CloudFront is configured, the S3 bucket can continue to be used with no changes.

Amazon EC2. CloudFront supports using an Amazon EC2 server or an Elastic Load Balancing endpoint as an origin for files in a CloudFront distribution. CloudFront’s support for custom HTTP/HTTPS origins is what enables this integration, meaning that it’s also possible to use a non-EC2 server as a file origin.

AWS Lambda@Edge. Working essentially just like AWS Lambda, Lambda@Edge functions run at CloudFront edge locations. This means the code of Lambda@Edge functions runs closer to where your users are located, and as a result you can provide a faster, smoother experience to your website visitors or application users. The functions on Lambda@Edge can “intercept” the requests and the responses between CloudFront and the origin of the data, as well as between CloudFront and the end user.

AWS Elemental MediaStore and MediaPackage. CloudFront supports AWS MediaStore and MediaPackage as origins for existing or live video content, which is then distributed to the end users using CloudFront endpoints.

Amazon CloudWatch. Every CloudFront distribution emits Amazon CloudWatch metrics such as total number of requests, error rates, and Lambda@Edge throttles and execution errors. These metrics are available with no additional configuration and don’t count against CloudWatch limits, making them a good starting point for monitoring your CloudFront usage.

Caching content with Amazon CloudFront

Amazon CloudFront can speed up content delivery through its edge cache. When a user accesses a website or application and requests content, the request is routed to the nearest CloudFront edge location. Only the first user experiences a delay while the content is fetched; all subsequent users of the same content can retrieve it quickly, because it is cached at the edge location.

A user request is processed as follows:

- CloudFront checks its cache for the requested object. If the object is in the cache, CloudFront returns it.

- If the requested object is not in the CloudFront cache, the request is forwarded to the configured origin server. CloudFront caches the object returned by the origin server and then returns it to the user.

Objects are cached in CloudFront for the configured TTL (time to live); after the TTL expires, the object is no longer served from the cache, as shown in Figure 2.

Figure 2: Caching image content with CloudFront
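The TTL behavior can be illustrated with a small, self-contained sketch. It is a simplified model rather than CloudFront's implementation: the class name is hypothetical, and the clock is passed in as a function so that expiry can be demonstrated without waiting.

```python
from typing import Callable, Dict, Tuple


class TtlCache:
    """Cache that serves an object until its TTL expires, then refetches it."""

    def __init__(self, fetch_from_origin: Callable[[str], bytes],
                 ttl_seconds: float, clock: Callable[[], float]) -> None:
        self.fetch = fetch_from_origin
        self.ttl = ttl_seconds
        self.clock = clock
        self.entries: Dict[str, Tuple[bytes, float]] = {}  # key -> (value, expiry)

    def get(self, key: str) -> Tuple[bytes, bool]:
        """Return (object, served_from_cache)."""
        now = self.clock()
        entry = self.entries.get(key)
        if entry is not None and now < entry[1]:
            return entry[0], True
        # Missing or expired: go back to the origin and restart the TTL.
        value = self.fetch(key)
        self.entries[key] = (value, now + self.ttl)
        return value, False


if __name__ == "__main__":
    current_time = [0.0]  # fake clock, advanced by hand
    cache = TtlCache(lambda key: key.encode(), ttl_seconds=60,
                     clock=lambda: current_time[0])
    print(cache.get("/images/cat.jpg")[1])  # not cached yet
    current_time[0] = 30.0
    print(cache.get("/images/cat.jpg")[1])  # still within the TTL
    current_time[0] = 60.0
    print(cache.get("/images/cat.jpg")[1])  # TTL expired, fetched again
```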